Chanced upon this interview in the SOLiD community, but the thread went missing two days later. Oh well, here's the google cache link.

P.S. If there's a valid reason why it was taken down, please let me know and I will do the same.

Update: the page is up again.

Recently we had the chance to talk with Frank You from the Department of Plant Sciences at U.C. Davis and the Genomics and Gene Discovery Research Unit of the USDA-ARS about his publication in BMC Genomics, Annotation-based genome-wide SNP discovery in the large and complex Aegilops tauschii genome using next-generation sequencing without a reference genome sequence.*

You are discovering genome-wide SNPs in plant species. What is the reason you want to discover these SNPs?

Genome-wide SNPs are important resources for marker-assisted selection in breeding and for constructing high-density genetic maps, which are required for map-based cloning and whole-genome sequencing. In our study, we discovered genome-wide SNPs in Aegilops tauschii, the diploid ancestor of the wheat D genome, with a genome size of 4.02 Gb, of which 90% is repetitive sequence. We are using these SNPs to construct a high-density genetic map for wheat D genome sequencing.

Please briefly explain the challenges you faced, and the solution you came to, in order to do this in the absence of a reference.

We have no reference sequences available for Ae. tauschii SNP discovery. For short reads generated by next-generation sequencing platforms, especially SOLiD™ and Solexa, the major challenge is mapping errors when short reads from one genotype are mapped to short reads from another genotype in highly repetitive, complex genomes. Our idea is to reduce the complexity of the genome.

It is assumed that most genes are in a single-copy dose in a genome, and sequences of duplicated genes are usually diverged to such an extent that most of their reads do not cluster together. Therefore, the read depth (number of reads of the same nucleotide position) mapped to coding sequences of known genes estimates the expected read depth of all single-copy sequences in a genome. Sequences showing greater read depth are assumed to be from duplicated or repeated sequences. To implement this rationale, shallow genome coverage by long Roche 454 sequences is used to identify genic sequences by homology search against gene databases. Multiple genome coverages of short SOLiD™ or Solexa sequences are then used to estimate the read depth of genic sequences in a population of SOLiD™ or Solexa reads. The estimate is in turn used to identify (annotate) the remaining single-copy Roche 454 reads.
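The read-depth rationale above can be illustrated with a minimal Python sketch. It is not the authors' pipeline: the function name `classify_by_depth`, the read IDs, and the cutoff rule (mean genic depth plus two standard deviations) are assumptions made for illustration, since the interview does not state how the threshold is chosen.

```python
from statistics import mean, stdev


def classify_by_depth(genic_depths, read_depths, n_sd=2.0):
    """Label reads as single-copy or repeat from short-read depth.

    genic_depths: short-read depths observed on 454 reads already
                  identified as genic by homology search.
    read_depths:  mapping of 454 read ID -> short-read depth for the
                  reads that still need annotation.
    n_sd:         hypothetical tolerance; the cutoff rule is an
                  assumption, not taken from the paper.
    """
    # Genic reads are assumed to be mostly single-copy, so their mean
    # depth estimates the expected depth of any single-copy sequence.
    expected = mean(genic_depths)
    cutoff = expected + n_sd * stdev(genic_depths)

    # Reads with depth above the cutoff are treated as duplicated or
    # repeated sequence; everything else is kept as single-copy.
    labels = {
        read_id: "single-copy" if depth <= cutoff else "repeat"
        for read_id, depth in read_depths.items()
    }
    return labels, expected, cutoff


if __name__ == "__main__":
    genic = [4, 5, 6, 5, 5]                       # made-up depths
    unknown = {"r1": 5, "r2": 40, "r3": 6}        # made-up read IDs
    labels, expected, cutoff = classify_by_depth(genic, unknown)
    print(expected, round(cutoff, 2), labels)
```

In practice the cutoff would be tuned to the depth distribution of the actual data; the point here is only that genic reads calibrate what "single-copy depth" looks like.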

This combination of Roche 454 and SOLiD™ or Solexa platforms combines the long length of Roche 454 reads with the high coverage of the SOLiD™/Solexa sequencing platforms, thus reducing costs associated with the development of a reference sequence. Short SOLiD™ or Solexa reads are mapped and aligned to the Roche 454 reads or contigs with short-read mapping tools. After the annotation of all sequences, SNPs are called and filtered.

An important part of your pipeline is the ability to call SNPs in repeat junctions. What is this, and why is it important?

Transposable elements (TEs) make up large proportions of many eukaryotic genomes. For example, they represent ~35% of the rice genome and ~90% of the hexaploid wheat genome, and they contribute significantly to the size, organization and evolution of plant genomes. Repeat junctions (RJs) are created by insertions of TEs into each other, into genes, or into other DNA sequences. Previous studies showed that these repeat junctions are commonly unique and genome-specific. They can therefore be treated as single-copy markers in the genome.

The genome specificity of TE junction-based markers makes them particularly useful for mapping polyploid species, including many important crops such as wheat and cotton. Because repeat junctions are also abundant and randomly distributed along chromosomes, they have great potential for developing genome-wide molecular markers for high-throughput mapping and diversity studies in large and complex genomes.

In this paper you used both base space and color space data. Did you find any challenges with mixing these data types?

We had difficulty using base space and color space data together in read mapping. No academic command-line-based programs for hybrid read mapping are currently available. Thus, this is still a challenge for hybrid data mapping. Instead, we can perform short read mapping separately for color-space and base-space data, and then merge the results in the pipeline.
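The "map separately, then merge" workaround could look something like the following minimal Python sketch. It assumes each mapping run has already been reduced to per-site allele counts, with color-space alignments decoded to bases by the mapper. The function name `merge_allele_counts`, the site keys, and the sample data are hypothetical and not taken from the authors' pipeline.

```python
from collections import Counter


def merge_allele_counts(base_space, color_space):
    """Combine per-site allele counts from two independent mapping runs.

    Each input maps a site key (reference read ID, position) to a
    Counter of observed bases at that site. The inputs are not modified.
    """
    merged = {}
    for source in (base_space, color_space):
        for site, counts in source.items():
            # Start a fresh Counter per site, then add counts from
            # whichever runs covered that site.
            merged.setdefault(site, Counter()).update(counts)
    return merged


if __name__ == "__main__":
    # Made-up example: one site covered by both runs, one by a single run.
    base_run = {("read454_1", 10): Counter({"A": 3})}
    color_run = {
        ("read454_1", 10): Counter({"A": 2, "G": 4}),
        ("read454_2", 7): Counter({"C": 1}),
    }
    for site, counts in merge_allele_counts(base_run, color_run).items():
        print(site, dict(counts))
```

Merging at the level of allele counts keeps each mapper working in its native encoding and defers the combination to the SNP-calling step.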

Given the highly repetitive sequence you were working with, will this method work even better with less repetitive genomes?

Yes. I expect that the method proposed in this paper will work even better with less repetitive genomes.

*Annotation-based genome-wide SNP discovery in the large and complex Aegilops tauschii genome using next-generation sequencing without a reference genome sequence.

You FM, Huo N, Deal KR, Gu YQ, Luo MC, McGuire PE, Dvorak J, Anderson OD

BMC Genomics 2011, 12:59 (25 January 2011)

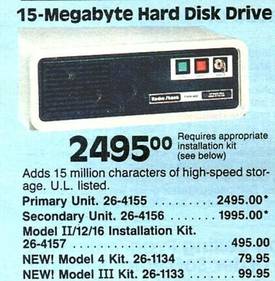
