Ah storage, who doesn't need more of it? Cheaply I might add.

The folks at Backblaze published their own field report on HDD failure rates, which is interesting reading for anyone running a data center.

Earlier I had read about Google's study on how temperature doesn't affect HDD failure rate and promptly removed the noisy HDD cooling fans in my Linux box.

Their latest blog post at http://blog.backblaze.com/2013/11/12/how-long-do-disk-drives-last/ has me thinking that some of my colleagues elsewhere who are doing Backblaze-like setups should switch to consumer-grade HDDs to save on cost.

I do have an 80 GB Seagate HDD that has survived the years. Admittedly I am not sure what to do with it anymore, as it is too small (80 GB) to be useful and too big (3.5") to be portable. It was used as a main HDD until its size rendered it obsolete, hence it's sitting in a USB HDD dock that I use occasionally.

Maybe you can find out the age by looking up the serial number, but I use the SMART data that you can see in the Disk Utility in Ubuntu.

(Screenshot: My ancient 3.5" HDD)

However, the age, as you can see from the screenshot, is an estimate of the number of days the drive has been powered on.

(Screenshot: Powered on only for 314 days!)

Hmm, pretty low mileage for an 80 GB HDD, eh?
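For the curious, here is a minimal sketch of how a "powered on days" figure can be derived from raw SMART data, assuming the drive reports attribute 9 (Power_On_Hours) in plain hours. The sample table below is made up for illustration, laid out like the attribute listing that `smartctl -A` prints; it is not real output from my drive, and the helper name `power_on_days` is my own. Some drives format or count the raw value differently, so treat the result as an estimate.

```python
import re
from typing import Optional

HOURS_PER_DAY = 24

def power_on_days(smart_attributes: str) -> Optional[float]:
    """Return powered-on time in days from a SMART attribute table, or None.

    Assumes attribute ID 9 (Power_On_Hours) holds a raw value in hours.
    """
    for line in smart_attributes.splitlines():
        fields = line.split()
        # Attribute rows have 10 columns and start with the numeric ID.
        if len(fields) >= 10 and fields[0] == "9":
            # Take the leading digits only, in case the raw value has a suffix.
            match = re.match(r"\d+", fields[9])
            if match:
                return int(match.group()) / HOURS_PER_DAY
    return None

# Illustrative sample only (hypothetical values).
SAMPLE = """\
ID# ATTRIBUTE_NAME          FLAG     VALUE WORST THRESH TYPE      UPDATED  WHEN_FAILED RAW_VALUE
  5 Reallocated_Sector_Ct   0x0033   100   100   036    Pre-fail  Always       -       0
  9 Power_On_Hours          0x0032   092   092   000    Old_age   Always       -       7536
"""

print(power_on_days(SAMPLE))  # 7536 hours / 24 = 314.0 days
```

The counter only advances while the drive has power, so a disk that spent years in a drawer or an occasionally used USB dock will look much younger than its manufacture date suggests.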

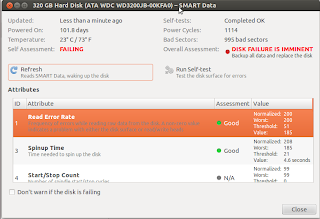

Check out this 320 GB IDE HDD.

Even lower mileage! I'm not too sure of the history of this drive, so I can't really comment here.

Completely anecdotal, but I had three Seagate 1 TB HDDs die within a year in a five-drive software RAID array under CentOS. When I checked the powered-on days, the SMART data said they had been running for 3 years. So I am

1) confused how SMART data records HDD age

2) in agreement with Backblaze that HDDs have specific failure phases (where usage patterns perhaps play less of a role)

3) guessing that most of the data at Backblaze is archival in nature, i.e. write once and forget until disaster strikes. So it would be great if Backblaze could 'normalize' the lifespan of each HDD against its data access patterns, to make the numbers more relevant for a crowd whose usage pattern differs from pure data archival.

That said, I think it's an excellent piece of reading if you are concerned about using consumer-grade HDDs. Kudos to the Backblaze team, who managed to 'shuck' 5.5 petabytes of raw HDDs to weather the Thailand crisis (I wonder how that affected their economics of using consumer-grade HDDs).

As usual, YMMV applies here. Feel free to use consumer-grade HDDs for your archival needs, but be sure to build redundancy and resilience into your system like the folks at Backblaze.
